Boulder, CO – (March 18, 2019) – Black Swift Technologies (BST), a specialized engineering firm based in Boulder, CO, announced today it has completed the first phase of a NASA-funded project to demonstrate the effectiveness of fusing a host of onboard sensors to develop a terrain-following fixed-wing unmanned aircraft system (UAS). The system will be demonstrated using the Black Swift S2™ UAS.

BST’s understanding and integration of artificial intelligence (AI) and machine learning can help serve as a catalyst for accelerating UAS growth and adoption, industry-wide. Through autonomous, active navigation around obstacles and over rugged terrain by a fixed-wing UAS, BST is demonstrating how technology can help make UAS operation simpler and safer, for both operators and the public.

“Our state-of-the-art sensor suite and approach to sensor fusion enables a number of capabilities not yet seen for fixed-wing UAS,” says Jack Elston, Ph.D., CEO of Black Swift Technologies. “Integration of these developments with our highly capable avionics subsystem can make flying a fixed-wing small UAS significantly safer for operations in difficult terrain or beyond line of sight.”

Relevance of the Technology

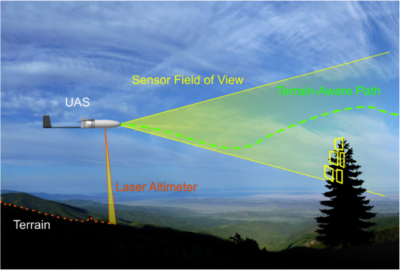

Fixed-wing aircraft can scan substantially more area in less time than their multi-rotor counterparts. Yet low-altitude sensing by fixed-wing UAS is not without its challenges. Obstacles such as trees and towers, along with terrain variations that can exceed the climb capabilities of the aircraft, are among the inhibitors to widespread use of fixed-wing aircraft for scientific and commercial data-gathering operations (Figure 1).
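The climb-capability constraint can be illustrated with a minimal Python sketch. This is not BST's algorithm: the function name, the evenly spaced elevation samples, and the 10-degree climb limit are all hypothetical and chosen only for illustration. The sketch flags the segments of a flight track where the terrain rises more steeply than the aircraft can climb.

```python
import math


def segments_exceeding_climb(elevations_m, spacing_m, max_climb_angle_deg):
    """Return indices of track segments steeper than the aircraft can climb.

    elevations_m: terrain elevation samples along the track (hypothetical data)
    spacing_m: horizontal distance between consecutive samples
    max_climb_angle_deg: assumed maximum sustained climb angle of the aircraft
    """
    if spacing_m <= 0:
        raise ValueError("spacing_m must be positive")

    # Convert the climb angle to a rise-over-run gradient.
    max_gradient = math.tan(math.radians(max_climb_angle_deg))

    flagged = []
    for i in range(len(elevations_m) - 1):
        gradient = (elevations_m[i + 1] - elevations_m[i]) / spacing_m
        if gradient > max_gradient:
            flagged.append(i)
    return flagged


# Illustrative values only: four samples spaced 50 m apart, 10-degree limit.
steep = segments_exceeding_climb([100.0, 105.0, 130.0, 132.0], 50.0, 10.0)
```

A terrain-following planner would need to begin its climb ahead of any flagged segment, or route around it, which is one reason look-ahead sensing matters for fixed-wing aircraft.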

BST’s solution fuses state-of-the-art machine vision technologies with advanced sensors, including lidar and radar, into a modular subsystem enabling a fixed-wing UAS to operate safely in a variety of theaters and weather conditions.

While initial deployments will focus on fixed-wing UAS, starting with the Black Swift S2, this subsystem of augmented onboard intelligence will be extended to multi-rotor UAS as well as other UAS platforms in future iterations.

Beyond Terrain Following to Obstacle Avoidance

The last few years have seen significant growth in collision avoidance technology for multi-rotor vehicles. This has not only driven the miniaturization and diversification of proximity sensing suites, but also spawned a number of technologies for providing onboard image processing and data fusion. While some of these technologies are applicable to fixed-wing collision avoidance, the relatively low speeds of average multi-rotor UAS and their ability to hover in place have generally produced shorter-range proximity sensing solutions. The dynamics and relatively high speed of larger fixed-wing UAS require much longer range sensing and predictive real-time avoidance decision processing capabilities to give the aircraft sufficient time to react.
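The relationship between airspeed and required sensing range can be sketched with simple arithmetic. The Python example below is a simplified illustration, not BST's method: the function name, the speeds, the reaction time, and the maneuver distance are hypothetical values, not specifications of any aircraft. It assumes the aircraft keeps flying toward the obstacle at constant speed while the system detects and decides, then needs a fixed additional distance to complete the avoidance maneuver.

```python
def required_sensing_range(speed_mps, reaction_time_s, maneuver_distance_m=0.0):
    """Estimate the minimum detection range needed to avoid an obstacle.

    speed_mps: closing speed toward the obstacle (assumed constant)
    reaction_time_s: assumed time to detect, decide, and begin maneuvering
    maneuver_distance_m: assumed extra distance needed to complete the maneuver
    """
    if speed_mps < 0 or reaction_time_s < 0 or maneuver_distance_m < 0:
        raise ValueError("inputs must be non-negative")

    # Distance covered before the maneuver starts, plus the maneuver itself.
    return speed_mps * reaction_time_s + maneuver_distance_m


# Illustrative values only: a slow vehicle that can stop in place
# versus a faster vehicle that must fly around the obstacle.
slow_range_m = required_sensing_range(5.0, 2.0)
fast_range_m = required_sensing_range(20.0, 2.0, 30.0)
```

Under these assumed numbers the faster vehicle needs several times the detection range of the slower one, which is consistent with the point that fixed-wing aircraft call for longer-range sensors than multi-rotors.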

Recent advancements in self-driving cars and advanced driver assistance systems (ADAS) for automotive applications have resulted in a variety of longer-range sensors including radars and lidars. This project fuses common vision-based techniques with both lidar and radar, enabling fixed-wing UAS data gathering flights in a wide breadth of environments.

When an in-flight emergency is encountered, especially when the UAS is flying beyond visual line of sight (BVLOS), remote and autonomous landings can be safely achieved by leveraging the system’s online machine vision classifiers. These classifiers can accurately and effectively identify obstacles (people, buildings, vehicles, structures, etc.) that could prevent the aircraft from finding a viable and safe landing area (Figure 2). The result is an autonomous, remote landing that does not harm people or property.

Applications

Many UAS applications demand a vehicle able to cover a large sampling area. These include pipeline and other infrastructure inspection, rock and mudslide monitoring, snowpack analysis, forest biofuel calculation, invasive plant species identification, trace gas emission observation over volcanoes, and missions requiring high-resolution imagery.

A UAS capable of routinely carrying the necessary instruments through difficult environments makes an invaluable contribution to the calibration and validation of data collected from ground- and satellite-based methods. This use of active remote sensing (sending out a signal that interacts with the environment, then detecting the resulting changed signal) allows data collected from a UAS to enhance comprehensive 3D models more effectively than traditional remote sensing methods.

In the case of volcano monitoring, low-AGL UAS flights (following the terrain of the forest canopy) enable the vehicle to directly sample gas plumes and ash clouds that are low to the ground, where the richest chemical and physical characteristics exist immediately after eruptions (Figure 3).

The use of a UAS to measure hazardous phenomena, such as wildfire smoke, eliminates the risk of harm to researchers and scientists making observations in close proximity. A UAS gives researchers the ability to collect the desired data sets while remaining at a safe vantage point from the danger posed by the phenomena.

Catalyst for UAS Growth and Adoption

Black Swift’s system allows for active navigation around obstacles and rugged terrain by fixed-wing UAS, thus reducing adverse impact to either people or property.

By making UAS operation safer—for both operators and the public—BST’s understanding and integration of AI and machine learning can help serve as a catalyst for accelerating UAS growth and adoption, industry-wide.